Systematic Review Vs Meta Analysis Vs Literature Review

Discuss alternative approaches to integrating research evidence and advantages of using systematic methods

Describe key decisions and steps in doing a meta-analysis and metasynthesis

Critique key aspects of a written systematic review

Define new terms in the chapter

Key Terms

Effect size (ES)

Forest plot

Frequency effect size

Intensity effect size

Manifest effect size

Meta-analysis

Meta-ethnography

Meta-summary

Metasynthesis

Primary study

Publication bias

Statistical heterogeneity

Subgroup analysis

Systematic review

In Chapter 7, we described major steps in conducting a literature review. This chapter also discusses reviews of existing evidence but focuses on systematic reviews, especially those in the form of meta-analyses and metasyntheses. Systematic reviews, a cornerstone of evidence-based practice (EBP), are inquiries that follow many of the same rules as those for primary studies, i.e., original research investigations. This chapter provides guidance to help you understand and evaluate systematic research integration.

RESEARCH INTEGRATION AND SYNTHESIS

A systematic review integrates research evidence about a specific research question using careful sampling and data collection procedures that are spelled out in advance. The review process is disciplined and transparent so that readers of a systematic review can assess the integrity of the conclusions.

Twenty years ago, systematic reviews usually involved narrative integration, using nonstatistical methods to synthesize research findings. Narrative systematic reviews continue to be published, but meta-analytic techniques that use statistical integration are widely used. Most reviews in the Cochrane Collaboration, for example, are meta-analyses. Statistical integration, however, is sometimes inappropriate, as we shall see.

Qualitative researchers have also developed techniques to integrate findings across studies. Many terms exist for such endeavors (e.g., meta-study, meta-ethnography), but the one that has emerged as the leading term is metasynthesis.

The field of research integration is expanding steadily. This chapter provides a brief introduction to this important and complex topic.

META-ANALYSIS

Meta-analyses of randomized controlled trials (RCTs) are at the top of traditional evidence hierarchies for Therapy questions (see Fig. 2.1). The essence of a meta-analysis is that findings from each study are used to compute a common index, an effect size. Effect size values are averaged across studies, yielding information about the relationship between variables across multiple studies.

Advantages of Meta-Analyses

Meta-analysis offers a simple advantage as an integration method: objectivity. It is hard to draw objective conclusions about a body of evidence using narrative methods when results are inconsistent, as they often are. Narrative reviewers make subjective decisions about how much weight to give findings from different studies, so different reviewers may reach different conclusions in reviewing the same studies. Meta-analysts make decisions that are explicit and open to scrutiny. The integration itself also is objective because it uses statistical formulas. Readers of a meta-analysis can be confident that another analyst using the same data set and analytic decisions would come to the same conclusions.

Another advantage of meta-analysis concerns power, i.e., the probability of detecting a true relationship between variables (see Chapter 14). Combining effects across multiple studies increases power. In a meta-analysis, it is possible to conclude that a relationship is real (e.g., an intervention is effective), even when several small studies yielded nonsignificant findings. In a narrative review, 10 nonsignificant findings would almost surely be interpreted as lack of evidence of a true effect, which could be the wrong conclusion.
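Here is a rough numeric sketch of this point. It uses the normal approximation for comparing two group means. The true effect (d = 0.40), the group size (25 per group), and the number of trials (10) are illustrative assumptions. For simplicity, pooling 10 identical trials is treated like running one trial 10 times as large.

```python
from statistics import NormalDist

def approx_power(d, n_per_group, alpha=0.05):
    """Approximate two-sided power of a two-group comparison when the true
    standardized mean difference is d (normal approximation; the tiny chance
    of a significant result in the wrong direction is ignored)."""
    z = NormalDist()                        # standard normal distribution
    z_crit = z.inv_cdf(1 - alpha / 2)       # about 1.96 when alpha = .05
    se = (2 / n_per_group) ** 0.5           # approximate SE of d for small d
    return z.cdf(d / se - z_crit)

# Hypothetical scenario: 10 small trials, each with 25 people per group
single_trial = approx_power(0.40, 25)
pooled_trials = approx_power(0.40, 25 * 10)
print(f"Power of one small trial:        {single_trial:.2f}")
print(f"Power with all 10 trials pooled: {pooled_trials:.2f}")
```

Under these assumptions, each trial has only about a 29% chance of reaching significance. A run of nonsignificant results is therefore what one would expect even if the intervention works. The pooled data, by contrast, detect the effect roughly 99% of the time.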

Despite these advantages, meta-analysis is not always appropriate. Indiscriminate use has led critics to warn against potential abuses.

Criteria for Using Meta-Analytic Techniques in a Systematic Review

Reviewers need to decide whether statistical integration is suitable. A basic criterion is that the research question should be nearly identical across studies. This means that the independent and dependent variables, and the study populations, are sufficiently similar to merit integration. The variables may be operationalized differently, to be sure. Nurse-led interventions to promote healthy diets among diabetics could be a 4-week clinic-based program in one study and a 6-week home-based intervention in another, for example. However, a study of the effects of a 1-hour lecture to discourage eating "junk food" among overweight adolescents would be a poor candidate to include in this meta-analysis. This is often referred to as the "apples and oranges" or "fruit" problem. Meta-analyses should not be about fruit (i.e., a broad category) but rather about "apples," or, even better, "Granny Smith apples."

Another criterion concerns whether there is a sufficient knowledge base for statistical integration. If there are only a few studies or if all of the studies are weakly designed, it usually would not make sense to compute an "average" effect.

Another issue concerns the consistency of the evidence. When the same hypothesis has been tested in multiple studies and the results are highly conflicting, meta-analysis is likely not appropriate. As an extreme example, suppose half the studies testing an intervention found benefits for those in the intervention group, but the other half found benefits for the controls. It would then be misleading to compute an average effect. In this situation, it would be better to do an in-depth narrative analysis of why the results are conflicting.

Example of inability to conduct a meta-analysis

Langbecker and Janda (2015) undertook a systematic review of interventions designed to improve information provision for adults with primary brain tumors. They had intended to undertake a meta-analysis, " . . . but the heterogeneity in intervention types, outcomes, and study designs means that the data were unsuitable for this" (p. 3).

Steps in a Meta-Analysis

We begin by describing major steps in a meta-analysis so that you can understand the decisions a meta-analyst makes, decisions that affect the quality of the review and need to be evaluated.

Problem Formulation

A systematic review begins with a problem statement and a research question or hypothesis. Questions for a meta-analysis are usually narrow, focusing, for example, on a particular type of intervention and specific outcomes. The careful definition of key constructs is critical for deciding whether a primary study qualifies for the synthesis.

Example of a question from a meta-analysis

Dal Molin and colleagues (2014) conducted a meta-analysis that addressed the question of whether heparin was more effective than other solutions in catheter flushing among adult patients with central venous catheters. The clinical question was established using the PICO framework (see Chapter 2). The Population was patients using central venous catheters. The Intervention was use of heparin in the flushing, and the Comparison was other solutions (e.g., normal saline). Outcomes included obstructions, infections, and other complications.

A strategy that is gaining momentum is to undertake a scoping review to refine the specific question for a systematic review. A scoping review is a preliminary investigation that clarifies the range and nature of the evidence base, using flexible procedures. Such scoping reviews can suggest strategies for a full systematic review and can also indicate whether statistical integration (a meta-analysis) is feasible.

The Design of a Meta-Analysis

Sampling is an important design issue. In a systematic review, the sample consists of the primary studies that have addressed the research question. The eligibility criteria must be stated. Substantively, the criteria specify the population (P) and the variables (I, C, and O). For example, if the reviewer is integrating findings about the effectiveness of an intervention, which outcomes must the researchers have studied? With regard to the population, will (for example) certain age groups be excluded? The criteria might also specify that only studies that used a randomized design will be included. On practical grounds, reports not written in English might be excluded. Another decision is whether to include both published and unpublished reports.

Example of sampling criteria

Liao and coresearchers (2016) did a meta-analysis of the effects of massage on blood pressure in hypertensive patients. Primary studies had to be RCTs involving patients with hypertension or prehypertension. The intervention had to involve massage therapy, compared to a control condition (e.g., placebo, usual care, no treatment). To be included, the studies had to have blood pressure measurements as the primary outcome. Trials were excluded if they were not reported in English.
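Writing eligibility criteria down as explicit rules is one way to make screening transparent and repeatable. The sketch below encodes criteria modeled on the Liao example. The record fields, the candidate studies, and the wording of the exclusion reasons are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class CandidateStudy:
    # Hypothetical fields recorded for each report found in the search
    label: str
    design: str           # e.g., "RCT" or "quasi-experiment"
    population: str       # e.g., "hypertension" or "prehypertension"
    intervention: str     # e.g., "massage"
    has_control: bool     # placebo, usual care, or no treatment
    primary_outcome: str  # e.g., "blood pressure"
    language: str

def exclusion_reasons(s):
    """Return every criterion the study fails; an empty list means eligible."""
    reasons = []
    if s.design != "RCT":
        reasons.append("not an RCT")
    if s.population not in {"hypertension", "prehypertension"}:
        reasons.append("ineligible population")
    if s.intervention != "massage" or not s.has_control:
        reasons.append("no massage-vs-control comparison")
    if s.primary_outcome != "blood pressure":
        reasons.append("blood pressure not the primary outcome")
    if s.language != "English":
        reasons.append("not reported in English")
    return reasons

# Invented search results, for illustration only
candidates = [
    CandidateStudy("Study A", "RCT", "hypertension", "massage", True, "blood pressure", "English"),
    CandidateStudy("Study B", "quasi-experiment", "hypertension", "massage", True,
                   "blood pressure", "English"),
    CandidateStudy("Study C", "RCT", "prehypertension", "massage", True, "blood pressure", "Spanish"),
    CandidateStudy("Study D", "RCT", "prehypertension", "massage", True, "blood pressure", "English"),
]

for s in candidates:
    reasons = exclusion_reasons(s)
    print(s.label, "included" if not reasons else "excluded: " + "; ".join(reasons))
```

Recording the reason for each exclusion, rather than only the final yes/no decision, lets readers see exactly how the sample of primary studies was assembled.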

Researchers sometimes use study quality as a sampling criterion. Screening out studies of lower quality can occur indirectly if the meta-analyst excludes studies that did not use a randomized design. More directly, each potential primary study can be rated for quality and excluded if the quality score falls below a threshold. Alternatives for dealing with study quality are discussed in a later section. Suffice it to say, however, that evaluations of study quality are part of the integration process, so analysts need to decide how to assess quality and what to do with assessment information.

Another design issue concerns the statistical heterogeneity of results in the primary studies. For each study, meta-analysts compute an index to summarize the strength of relationship between an independent variable and a dependent variable. Just as there is inevitably variation within studies (not all people in a study have identical scores on outcomes), so there is inevitably variation in effects across studies. If the results are highly variable (e.g., results are conflicting across studies), a meta-analysis may be inappropriate. But if the results are moderately variable, researchers can explore why this might be so. For example, the effects of an intervention might be systematically different for men and women. Researchers often plan to explore such differences during the design stage of the project.

The Search for Evidence in the Literature

Many standard methods of searching the literature were described in Chapter 7. Reviewers must decide whether their review will cover published and unpublished findings. There is some disagreement about whether reviewers should limit their sample to published studies or should cast as wide a net as possible and include gray literature, that is, studies with a more limited distribution, such as dissertations or unpublished reports. Some people restrict their sample to reports in peer-reviewed journals, arguing that the peer review system is a tried-and-true screen for findings worthy of consideration as evidence.

Excluding nonpublished findings, however, runs the risk of biased results. Publication bias is the tendency for published studies to systematically overrepresent statistically significant findings. This bias (sometimes called the bias against the null hypothesis) is widespread. Authors may refrain from submitting manuscripts with nonsignificant results, reviewers and editors tend to reject such reports when they are submitted, and users of evidence may ignore the findings if they are published. The exclusion of gray literature in a meta-analysis can lead to the overestimation of effects.
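A small simulation shows why this matters. Purely for illustration, it assumes that many small studies estimate the same true effect. It also assumes that only those with a significant result in the favorable direction get published. The numbers are invented, and the publication filter is a deliberate caricature of the process described above.

```python
import random
from statistics import mean

random.seed(2024)               # fixed seed so the sketch is reproducible

TRUE_D = 0.20                   # assumed true effect (small)
N_PER_GROUP = 30                # assumed size of each group in every study
SE = (2 / N_PER_GROUP) ** 0.5   # approximate standard error of d

# Observed effect sizes in 5,000 hypothetical studies of the same question
observed = [random.gauss(TRUE_D, SE) for _ in range(5000)]

# Caricature of publication bias: only studies with a significant result
# in the favorable direction (z > 1.96) make it into print
published = [d for d in observed if d / SE > 1.96]

print(f"Mean d, all studies:        {mean(observed):.2f}")
print(f"Mean d, published only:     {mean(published):.2f}")
print(f"Share of studies published: {len(published) / len(observed):.0%}")
```

Under these assumptions, the published studies substantially overstate the true effect. Real publication processes are less extreme, so the size of the distortion here should not be taken literally.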

Meta-analysts can use various search strategies to locate gray literature in addition to the usual methods for a literature review. These include contacting key researchers in the field to see if they have done studies (or know of studies) that have not been published, hand searching the tables of contents of relevant journals, and reviewing abstracts from conference proceedings.

| TIP There are statistical procedures to detect and correct for publication biases, but opinions vary about their utility. A brief explanation of methods for assessing publication bias is included in the supplement to this chapter on the |

Example of a search strategy from a systematic review

Al-Mallah and colleagues (2016) did a meta-analysis of the effect of nurse-led clinics on the mortality and morbidity of patients with cardiovascular diseases. Their search strategy included a search of eight bibliographic databases, scrutiny of the bibliographies of all identified studies, and a hand search of relevant specialized journals.

Evaluations of Study Quality

In systematic reviews, the evidence from primary studies needs to be evaluated to assess how much confidence to place in the findings. Rigorous studies should be given more weight than weaker ones in coming to conclusions about a body of evidence. In meta-analyses, evaluations of study quality sometimes involve overall ratings of evidence quality on a multi-item scale. Hundreds of rating scales exist, but the use of such scales has been criticized. Quality criteria vary from instrument to instrument, so study quality can be rated differently with different assessment scales, or by different raters using the same scale. Also, when an overall scale score is used, the meaning of the scores often is not transparent to users of the review.

The Cochrane Handbook (Higgins & Green, 2008) recommends a domain-based evaluation, that is, a component approach, as opposed to a scale approach. Individual design elements are coded separately for each study. So, for example, a researcher might code for whether randomization was used, whether participants were blinded, the extent of attrition from the study, and so on.
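A toy example shows the difference between the two approaches. The two studies and their ratings below are invented. The three domains are the ones named in the text; a real domain-based evaluation would usually cover more elements.

```python
# Three quality domains named in the text; True = handled adequately
DOMAINS = ("randomization", "blinding", "attrition")

# Invented ratings for two hypothetical studies
ratings = {
    "Study 1": {"randomization": True, "blinding": False, "attrition": True},
    "Study 2": {"randomization": False, "blinding": True, "attrition": True},
}

def scale_score(study):
    """Scale approach: sum the items into a single overall score."""
    return sum(study[d] for d in DOMAINS)

def domain_profile(study):
    """Domain (component) approach: report each element separately."""
    return {d: "adequate" if study[d] else "concern" for d in DOMAINS}

for name, study in ratings.items():
    print(name, "scale score:", scale_score(study), "| domains:", domain_profile(study))
```

Both studies score 2 out of 3, yet one lacks blinding and the other lacks randomization. These weaknesses may matter very differently for a given question. The single score hides the difference, while the domain profile keeps it visible.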

Quality assessments of primary studies, regardless of approach, should be done by two or more qualified individuals. If there are disagreements between the raters, there should be a discussion until a consensus has been reached or until another rater helps to resolve the difference.

Example of a quality assessment

Bryanton, Beck (one of this book's authors), and Montelpare (2013) completed a Cochrane review of RCTs testing the effects of structured postnatal education for parents. They used the Cochrane domain approach to capture elements of trial quality. The first two authors completed assessments, and disagreements were resolved by discussion.

Extraction and Encoding of Data for Analysis

The next step in a meta-analysis is to extract and record relevant information about the findings, methods, and study characteristics. The goal is to create a data set amenable to statistical analysis.

Basic source information must be recorded (e.g., year of publication, country where data were collected). Important methodologic features include sample size, whether participants were randomized to treatments, whether blinding was used, rates of attrition, and length of follow-up. Characteristics of participants must be encoded as well (e.g., their mean age). Finally, data about findings must be extracted. Reviewers must either calculate effect sizes (discussed in the next section) or record sufficient statistical information that computer software can compute them.

As with other decisions, extraction and coding of data should be completed by two or more people, at least for a portion of the studies in the sample. This allows for an assessment of interrater reliability, which should be sufficiently high to persuade readers of the review that the data are accurate.
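The text does not prescribe a particular reliability index. One common choice for categorical codes is Cohen's kappa, which adjusts the raw percentage of agreement for the agreement expected by chance. In the sketch, two hypothetical coders have recorded whether each of 10 invented studies used randomization.

```python
from collections import Counter

def cohens_kappa(codes_a, codes_b):
    """Cohen's kappa for two coders who coded the same items into categories."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both coders must code the same, non-empty set of items")
    n = len(codes_a)
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # Chance agreement, from each coder's own category proportions
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n ** 2
    if expected == 1:
        return 1.0  # both coders used one identical category for every item
    return (observed - expected) / (1 - expected)

coder_1 = ["yes", "yes", "no", "yes", "no", "yes", "yes", "no", "yes", "yes"]
coder_2 = ["yes", "yes", "no", "yes", "yes", "yes", "yes", "no", "yes", "no"]

print(f"Raw agreement: {sum(a == b for a, b in zip(coder_1, coder_2)) / len(coder_1):.0%}")
print(f"Cohen's kappa: {cohens_kappa(coder_1, coder_2):.2f}")
```

Here the coders agree on 8 of 10 studies (80%), but kappa is only about 0.52. Much of that agreement would be expected by chance, given how often both coders answered "yes."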

Example of intercoder agreement

Chiu and colleagues (2016) conducted a meta-analysis of the effects of acupuncture on menopause-related symptoms in breast cancer survivors. Two researchers independently extracted data from seven RCTs using a standard form. Disagreements were resolved until consensus was reached.

Calculation of Effects

Meta-analyses depend on the calculation of an effect size (ES) index that encapsulates in a single number the relationship between the independent and outcome variable in each study. Effects are captured differently depending on the measurement level of variables. The three most common scenarios for meta-analysis are:

- comparisons of two groups (such as an intervention versus a control group) on a continuous outcome (e.g., blood pressure);
- comparisons of two groups on a dichotomous outcome (e.g., stopped smoking vs. continued smoking);
- correlations between two continuous variables (e.g., between blood pressure and anxiety scores).

The first scenario, comparison of two group means, is especially common. When the outcomes across studies are on identical scales (e.g., all outcomes are measures of weight in pounds), the effect is captured by simply subtracting the mean for one group from the mean for the other. For example, if the mean postintervention weight in an intervention group were 182.0 pounds and that for a control group were 194.0 pounds, the effect size would be −12.0. Typically, however, outcomes are measured on different scales (e.g., a scale of 0–10 or 0–100 to measure pain). In such situations, mean differences across studies cannot be combined and averaged; researchers need an index that is neutral to the original metric. Cohen's d, the effect size index most often used, transforms all effects into standard deviation units. If d were computed to be .50, it means that the mean for one group was one-half a standard deviation higher than the mean for the other group, regardless of the original measurement scale.
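The sketch below uses the common pooled-standard-deviation form of Cohen's d. It is applied to two hypothetical pain studies, one scored 0–10 and one scored 0–100. All means, standard deviations, and group sizes are assumptions chosen for illustration.

```python
import math

def cohens_d(mean_tx, sd_tx, n_tx, mean_ctl, sd_ctl, n_ctl):
    """Standardized mean difference (intervention minus control), using
    the pooled within-group standard deviation as the unit."""
    pooled_var = ((n_tx - 1) * sd_tx ** 2 + (n_ctl - 1) * sd_ctl ** 2) / (n_tx + n_ctl - 2)
    return (mean_tx - mean_ctl) / math.sqrt(pooled_var)

# Study 1: pain on a 0-10 scale (values assumed)
d_scale10 = cohens_d(4.0, 2.0, 40, 5.0, 2.0, 40)
# Study 2: pain on a 0-100 scale (values assumed)
d_scale100 = cohens_d(38.0, 24.0, 30, 50.0, 24.0, 30)

print(f"Raw differences: {4.0 - 5.0:.1f} points vs {38.0 - 50.0:.1f} points")
print(f"d, 0-10 scale:   {d_scale10:.2f}")   # intervention mean half an SD lower
print(f"d, 0-100 scale:  {d_scale100:.2f}")
```

The raw differences (1 point versus 12 points) are not comparable, but both translate into d = −0.50. The two studies can therefore be averaged on a common metric.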

| TIP The term effect size is widely used for d in the nursing literature, but the term usually used for Cochrane reviews is standardized mean difference or SMD. |

When the outcomes in the primary studies are dichotomies, meta-analysts usually use the odds ratio (OR) or the relative risk (RR) index as the ES statistic. In nonexperimental studies, a common effect size statistic is Pearson's r, which indicates the magnitude and direction of effect.
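For a dichotomous outcome, both indexes come from the same 2 × 2 table of counts. The sketch uses a hypothetical smoking-cessation trial in which 30 of 100 intervention participants and 20 of 100 controls stopped smoking. The counts are invented.

```python
def risk_ratio(events_tx, n_tx, events_ctl, n_ctl):
    """Relative risk: proportion with the outcome, intervention vs control."""
    return (events_tx / n_tx) / (events_ctl / n_ctl)

def odds_ratio(events_tx, n_tx, events_ctl, n_ctl):
    """Odds ratio: odds of the outcome, intervention vs control."""
    odds_tx = events_tx / (n_tx - events_tx)
    odds_ctl = events_ctl / (n_ctl - events_ctl)
    return odds_tx / odds_ctl

# Hypothetical trial: 30 of 100 intervention participants and
# 20 of 100 controls stopped smoking
rr = risk_ratio(30, 100, 20, 100)
or_ = odds_ratio(30, 100, 20, 100)
print(f"RR = {rr:.2f}, OR = {or_:.2f}")
```

Both indexes equal 1.0 when the groups do not differ. Here both exceed 1.0, but the OR (about 1.71) lies further from 1.0 than the RR (1.50). Reports therefore need to state which index was used.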

Data Analysis

After an effect size is computed for each study, as just described, a pooled effect estimate is computed as a weighted average of the individual effects. The bigger the weight given to any study, the more that study will contribute to the weighted average. A widely used approach is to give more weight to studies with larger samples.
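The sketch below assumes inverse-variance weighting, a widely used way to implement this. Each study is weighted by 1 divided by the variance of its effect size. Because variance shrinks as sample size grows, larger studies get more weight. The variance formula for d is a standard large-sample approximation, and the three studies are invented.

```python
import math

def var_d(d, n1, n2):
    """Approximate sampling variance of Cohen's d (large-sample formula)."""
    return (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))

def pooled_fixed(effects, variances):
    """Inverse-variance weighted average and its standard error."""
    weights = [1 / v for v in variances]
    estimate = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return estimate, math.sqrt(1 / sum(weights))

# Invented studies: (d, people per group)
studies = [(0.30, 20), (0.50, 40), (0.20, 100)]
effects = [d for d, _ in studies]
variances = [var_d(d, n, n) for d, n in studies]

est, se = pooled_fixed(effects, variances)
print(f"Unweighted mean d: {sum(effects) / len(effects):.2f}")
print(f"Pooled d: {est:.2f} (95% CI {est - 1.96 * se:.2f} to {est + 1.96 * se:.2f})")
```

The pooled estimate falls below the unweighted mean. The largest study, which has the smallest effect, carries most of the weight.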

An important decision concerns how to deal with the heterogeneity of findings (i.e., differences from one study to another in the magnitude and direction of effects). Statistical heterogeneity should be formally tested, and meta-analysts should report their results.
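The text does not name the test. A standard choice is Cochran's Q, often reported together with the I² statistic, which is the percentage of variation across studies attributable to heterogeneity rather than chance. The sketch computes both for two invented sets of five log odds ratios with equal variances, one consistent and one conflicting.

```python
def heterogeneity(effects, variances):
    """Cochran's Q (referred to chi-square with k - 1 df) and I-squared."""
    weights = [1 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0  # truncated at zero
    return q, df, i2

consistent = [0.40, 0.50, 0.35, 0.45, 0.42]    # invented log odds ratios
conflicting = [-0.80, 0.90, 0.10, 1.00, -0.70]
equal_var = [0.04] * 5

for label, ys in [("consistent", consistent), ("conflicting", conflicting)]:
    q, df, i2 = heterogeneity(ys, equal_var)
    print(f"{label:12s} Q = {q:5.2f} on {df} df, I-squared = {i2:.0%}")
```

For reference, the 5% critical value of chi-square with 4 df is about 9.49. Only the second set therefore shows statistically significant heterogeneity.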

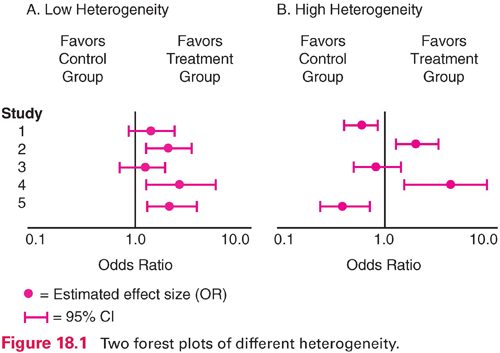

Visual inspection of heterogeneity usually relies on the construction of forest plots, which are often included in meta-analytic reports. A forest plot graphs the effect size for each study, together with the 95% confidence interval (CI) around each estimate. Figure 18.1 illustrates forest plots for five studies, with low heterogeneity in panel A and high heterogeneity in panel B. In panel A, all five effect size estimates (here, odds ratios) favor the intervention group. The CI information indicates the intervention effect is statistically significant (the CI does not encompass 1.0) for studies 2, 4, and 5. In panel B, by contrast, the results are "all over the map." Two studies favor the control group at significant levels (studies 1 and 5), and two favor the treatment group (studies 2 and 4). Meta-analysis is not appropriate for the situation in panel B.
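Here is a minimal text-only sketch of what a forest plot encodes. Each odds ratio's 95% CI is computed on the log scale and back-transformed, then drawn on a log axis against the no-effect line at 1.0. The five (log OR, SE) pairs are invented. Only studies 2, 4, and 5 were set up to have CIs that exclude 1.0.

```python
import math

def ci_from_log_or(log_or, se, z=1.96):
    """Odds ratio with its 95% CI, computed on the log scale and back-transformed."""
    return math.exp(log_or), math.exp(log_or - z * se), math.exp(log_or + z * se)

def excludes_one(lo, hi):
    """True when the CI lies entirely on one side of OR = 1.0 (no effect)."""
    return hi < 1.0 or lo > 1.0

def forest_row(or_, lo, hi, axis_min=0.1, axis_max=10.0, width=41):
    """Text version of one forest-plot row on a log axis: CI as dashes,
    the no-effect line at OR = 1.0 as '|', and the point estimate as 'o'."""
    def col(x):
        x = min(max(x, axis_min), axis_max)  # clip values that fall off the axis
        frac = math.log(x / axis_min) / math.log(axis_max / axis_min)
        return round(frac * (width - 1))
    row = [" "] * width
    for i in range(col(lo), col(hi) + 1):
        row[i] = "-"
    row[col(1.0)] = "|"
    row[col(or_)] = "o"
    return "".join(row)

# Invented (log OR, SE) pairs for five studies
studies = [(-0.30, 0.20), (-0.60, 0.20), (-0.20, 0.25), (-0.50, 0.15), (-0.40, 0.18)]

for k, (log_or, se) in enumerate(studies, start=1):
    or_, lo, hi = ci_from_log_or(log_or, se)
    flag = "CI excludes 1.0" if excludes_one(lo, hi) else "CI includes 1.0"
    print(f"Study {k}: OR {or_:.2f} ({lo:.2f} to {hi:.2f}) {forest_row(or_, lo, hi)} {flag}")
```

All five point estimates fall on the same side of the no-effect line. Only studies 2, 4, and 5 have intervals that clear it, which is the kind of low-heterogeneity pattern described for panel A.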

| TIP Heterogeneity affects not only whether a meta-analysis is appropriate but also which statistical model should be used in the analysis. When findings are similar, the researchers may use a fixed effects model. When results are more varied, it is better to use a random effects model. |
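The sketch below contrasts the two models. For the random effects model, it uses the DerSimonian and Laird estimator of the between-study variance (tau-squared), which is one common option among several. The five log odds ratios and their variances are invented.

```python
import math

def fixed_and_random(effects, variances):
    """Return (fixed estimate, fixed SE, random estimate, random SE, tau^2)."""
    if len(effects) < 2:
        raise ValueError("need at least two studies")
    w = [1 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sum_w
    # DerSimonian-Laird moment estimate of the between-study variance
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (len(effects) - 1)) / c)
    # Random effects weights add tau^2 to each study's own variance
    w_star = [1 / (v + tau2) for v in variances]
    random_est = sum(wi * y for wi, y in zip(w_star, effects)) / sum(w_star)
    return fixed, math.sqrt(1 / sum_w), random_est, math.sqrt(1 / sum(w_star)), tau2

log_ors = [0.10, 0.60, 0.35, 0.80, 0.20]      # invented, moderately varied
variances = [0.02, 0.05, 0.03, 0.04, 0.01]

fe_est, fe_se, re_est, re_se, tau2 = fixed_and_random(log_ors, variances)
print(f"tau^2 = {tau2:.3f}")
print(f"Fixed effects:  OR {math.exp(fe_est):.2f} (SE of log OR {fe_se:.3f})")
print(f"Random effects: OR {math.exp(re_est):.2f} (SE of log OR {re_se:.3f})")
```

When tau² is greater than zero, as here, the random effects weights become more even across studies. The pooled estimate then has a larger standard error, reflecting the extra uncertainty from between-study variation. When the studies agree, tau² is zero and the two models give the same answer.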

Source: https://nursekey.com/systematic-reviews-meta-analysis-and-metasynthesis/
